Spatial Reasoning in Multimodal Large Language Models: A Survey of Tasks, Benchmarks and Methods

Abstract

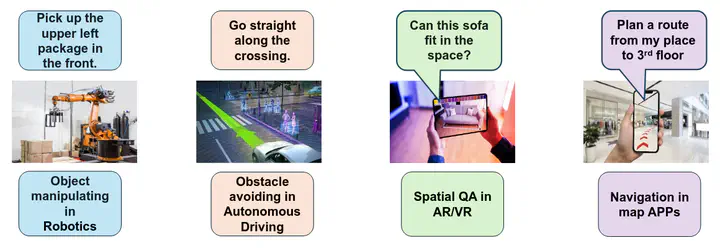

Spatial reasoning, which requires the ability to perceive and manipulate spatial relationships in the 3D world, is a fundamental aspect of human intelligence, yet it remains a persistent challenge for multimodal large language models (MLLMs). While existing surveys often categorize recent progress by input modality (e.g., text, image, video, or 3D), we argue that spatial ability is not solely determined by the input format. Instead, our survey introduces a taxonomy that organizes spatial intelligence from a cognitive perspective and divides tasks by reasoning complexity, linking them to several cognitive functions. We map existing benchmarks across text-only, vision-language, and embodied settings onto this taxonomy, and review evaluation metrics and methodologies for assessing spatial reasoning ability. This cognitive perspective enables more principled cross-task comparisons and reveals critical gaps between current model capabilities and human-like reasoning. In addition, we analyze methods for improving spatial ability, spanning both training-based and reasoning-based approaches. This dual-perspective analysis clarifies their respective strengths and uncovers complementary mechanisms. By surveying tasks, benchmarks, and recent advances, we aim to provide new researchers with a comprehensive understanding of the field and actionable directions for future research.

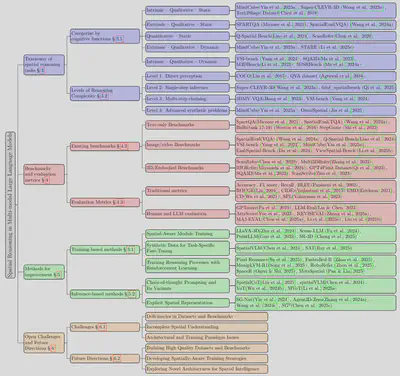

Survey Taxonomy

We introduce a cognitive taxonomy of spatial reasoning tasks, organizing them by cognitive function and reasoning complexity. We also map existing benchmarks onto this taxonomy, review evaluation metrics, and analyze training-based and reasoning-based methods for improving spatial ability. The survey highlights key gaps and future directions for developing models with more human-like spatial intelligence.