ElasticTrainer: Speeding Up On-Device Training with Runtime Elastic Tensor Selection

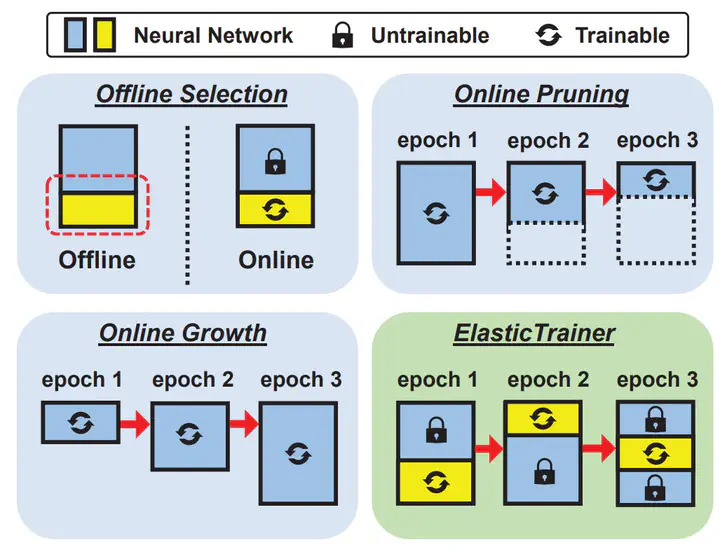

Existing work vs. ElasticTrainer

Abstract

On-device training is essential for neural networks (NNs) to continuously adapt to new online data, but can be time-consuming due to the device’s limited computing power. To speed up on-device training, existing schemes select the trainable NN portion offline or conduct unrecoverable selection at runtime, so the evolution of the trainable NN portion is constrained and cannot adapt to the current needs of training. Instead, runtime adaptation of on-device training should be fully elastic, i.e., every NN substructure can be freely removed from or added to the trainable NN portion at any time in training. In this paper, we present ElasticTrainer, a new technique that enforces such elasticity to achieve the required training speedup with the minimum NN accuracy loss. Experiment results show that ElasticTrainer achieves up to 3.5× more training speedup in wall-clock time and reduces energy consumption by 2×-3× more compared to the existing schemes, without noticeable accuracy loss.

Opportunities for on-device NN Training Speedup

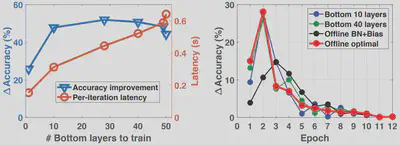

Since the pre-trained NN model has learned generic capabilities of extracting low-level features (e.g., color and texture information in images), on-device training only needs to be applied to some NN substructures and hence requires fewer training epochs and weight updates. As a result, we can potentially gain significant speedup by selecting a small trainable NN portion without losing much accuracy. When retraining a ResNet50 NN model on the CUB-200 dataset using traditional transfer learning methods, selecting an NN portion with only 10 bottom layers in the first 3 epochs yields validation accuracy similar to full retraining, but with a 2x training speedup. However, the optimality of traditional selection methods, such as offline exhaustive search, quickly deteriorates as training proceeds. This motivates us to move to online and fully elastic selection of the trainable NN portion.

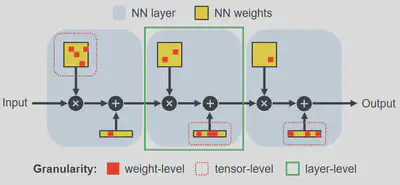

Granularity of Selection

The optimality of selecting the trainable NN portion is affected by the granularity of selection, which in most NNs can be at the level of weights, tensors, or layers. ElasticTrainer adopts tensor-level selection, which ensures accuracy and can also be efficiently executed in existing NN frameworks without extra overhead.
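To make tensor-level granularity concrete, the following is a minimal, framework-free sketch, not ElasticTrainer's implementation. It assumes a model represented as a dictionary of named tensors (the names `conv1/kernel`, `conv1/bias`, and `fc/kernel` and the helper `sgd_step` are hypothetical) and shows that selection reduces to one yes/no decision per tensor, so a kernel and a bias of the same layer can be selected independently.

```python
from typing import Dict, List, Set


def sgd_step(
    weights: Dict[str, List[float]],
    grads: Dict[str, List[float]],
    selected: Set[str],
    lr: float = 0.1,
) -> None:
    """Update, in place, only the tensors whose names are in `selected`."""
    for name in selected:
        w, g = weights[name], grads[name]
        for i in range(len(w)):
            w[i] -= lr * g[i]


# Hypothetical toy model: three named tensors stored as flat lists.
weights = {
    "conv1/kernel": [1.0, 2.0],
    "conv1/bias": [0.5],
    "fc/kernel": [3.0, 4.0],
}
grads = {
    "conv1/kernel": [1.0, 1.0],
    "conv1/bias": [1.0],
    "fc/kernel": [1.0, -1.0],
}

# Tensor-level selection: the bias of conv1 is trained, its kernel is not.
selected = {"conv1/bias", "fc/kernel"}
sgd_step(weights, grads, selected)
```

In this toy, gradients are supplied for every tensor for simplicity; in a real NN framework the time saving would come from not computing gradients for unselected tensors at all.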

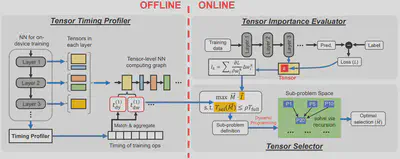

System Overview

ElasticTrainer’s design aims to select the optimal trainable NN portion at runtime, to achieve the desired training speedup with the maximum training loss reduction. In the offline stage, ElasticTrainer uses a Tensor Timing Profiler to profile the training times of selected tensors, which provides the inputs for calculating $ T_{sel}(M) $. In the online stage, the Tensor Importance Evaluator applies an accurate yet computationally efficient metric to evaluate the aggregate importance of the selected tensors and the corresponding reduction of training loss. The outputs of these two modules are used by the Tensor Selector to solve the selection problem via dynamic programming.
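The interplay of the three modules can be illustrated with a simplified sketch. It assumes that each tensor has a profiled training time and an importance score, that times are additive and independent across tensors, and that the time budget is given in integer milliseconds; under these assumptions the selection becomes a standard 0/1 knapsack solved by dynamic programming. This is a stand-in for illustration only: the page does not give ElasticTrainer's actual formulation of $ T_{sel}(M) $, and the function name `select_tensors` and all numbers below are hypothetical.

```python
from typing import List, Tuple


def select_tensors(
    importance: List[float], time_ms: List[int], budget_ms: int
) -> Tuple[float, List[int]]:
    """0/1 knapsack: maximize total importance with total time <= budget_ms."""
    n = len(importance)
    # best[i][b] = max total importance using the first i tensors within b ms.
    best = [[0.0] * (budget_ms + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        t, v = time_ms[i - 1], importance[i - 1]
        for b in range(budget_ms + 1):
            best[i][b] = best[i - 1][b]  # option 1: skip tensor i-1
            if t <= b and best[i - 1][b - t] + v > best[i][b]:
                best[i][b] = best[i - 1][b - t] + v  # option 2: select it

    # Backtrack through the table to recover which tensors were selected.
    chosen: List[int] = []
    b = budget_ms
    for i in range(n, 0, -1):
        if best[i][b] != best[i - 1][b]:
            chosen.append(i - 1)
            b -= time_ms[i - 1]
    chosen.reverse()
    return best[n][budget_ms], chosen


# Hypothetical profiler output (ms per tensor) and importance scores.
importance = [0.5, 0.2, 0.9, 0.4]
time_ms = [4, 2, 5, 3]
total, chosen = select_tensors(importance, time_ms, budget_ms=8)
```

With these made-up inputs and a budget of 8 ms, the sketch selects tensors 2 and 3, which exactly fill the budget. Because the selection is recomputed from fresh importance scores each time it runs, a tensor left out in one round can be selected again in a later one.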

Experimental Results

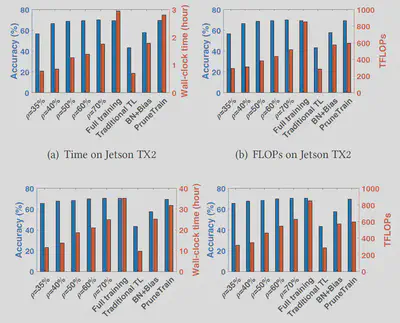

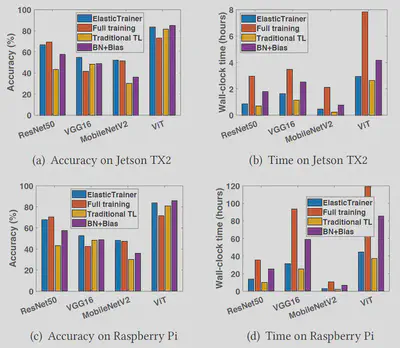

We implement ElasticTrainer on Nvidia Jetson TX2 and Raspberry Pi 4B embedded devices. We conduct extensive experiments with popular NN models (e.g., ResNet and Vision Transformer) and on difficult fine-grained image classification datasets (e.g., CUB-200 and Oxford-IIIT Pet).

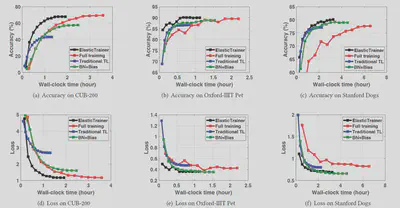

ElasticTrainer trains NN faster and converges to better accuracy!

ElasticTrainer can achieve up to 3.5x training speedup without noticeable accuracy loss!

ElasticTrainer can be applied to different NN model structures!

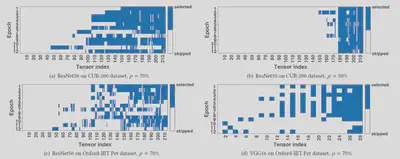

ElasticTrainer is indeed elastic at runtime! Its selected tensors show elastic patterns!