Trustworthy AI

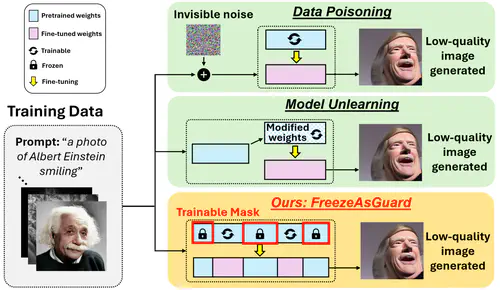

The versatility of recently emerging AI techniques also brings challenges in ensuring that AI systems are safe, fair, and explainable, and that they cause no harm. Our research aims to discover potential malicious adaptations of AI models and to propose protections and mitigations against unwanted model use.