The Intelligent System Lab at the University of Pittsburgh conducts pioneering research at the intersection of AI and computer systems. Our current research focuses on multimodal generative AI, on-device AI, mobile and embedded systems, mobile and connected health, cyber-physical systems, the Internet of Things, and more!

Our projects:

- Multimodal Generative AI

- On-device AI

- Trustworthy AI

- Mobile and Connected Health

- Mobile and Edge Computing Systems

- Intelligent Wireless Systems

Our tutorials:

Latest News

- Jul 2025: Our paper, ProGait: A Multi-Purpose Video Dataset and Benchmark for Transfemoral Prosthesis Users, has been accepted for publication at the 2025 International Conference on Computer Vision (ICCV 2025).

- Jun 2025: Our paper, Data Can Speak for Itself: Quality-guided Utilization of Wireless Synthetic Data, has been accepted for publication at the ACM International Conference on Mobile Systems, Applications, and Services (MobiSys 2025) and received the Best Paper Award!

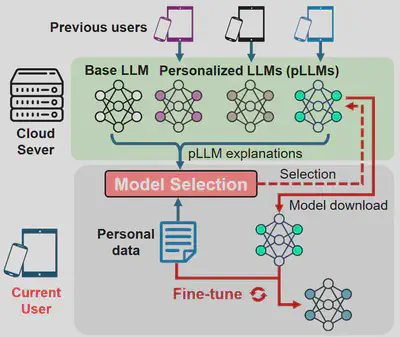

- Jun 2025: Our paper, Never Start from Scratch: Expediting On-Device LLM Personalization via Explainable Model Selection, has been accepted for publication at the ACM International Conference on Mobile Systems, Applications, and Services (MobiSys 2025).

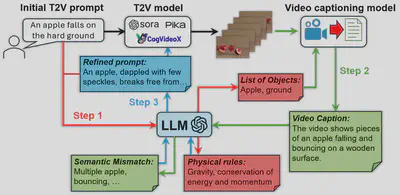

- Apr 2025: Our paper, PhyT2V: LLM-Guided Iterative Self-Refinement for Physics-Grounded Text-to-Video Generation, has been accepted for publication at the Conference on Computer Vision and Pattern Recognition 2025 (CVPR 2025).

- Dec 2024: Our paper, Tackling Intertwined Data and Device Heterogeneities in Federated Learning with Unlimited Staleness, has been accepted for publication at the 39th Annual AAAI Conference on Artificial Intelligence (AAAI 2025).

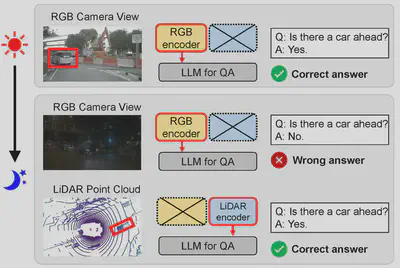

- Dec 2024: Two of our papers, When Device Delays Meet Data Heterogeneity in Federated AIoT Applications and Modality Plug-and-Play: Runtime Modality Adaptation in LLM-Driven Autonomous Mobile Systems, have been accepted for publication at the 2025 ACM International Conference on Mobile Computing and Networking (MobiCom'25).

Multimodal Generative AI

Generative AI could revolutionize many current and emerging applications and industries. In real-world applications, rich data modalities beyond text are being integrated into generative AI research to solve emerging challenges. Our research explores multimodal generative AI computation and unleashes the potential of current models.

Data Can Speak for Itself: Quality-guided Utilization of Wireless Synthetic Data

MobiSys 2025

PhyT2V: LLM-Guided Iterative Self-Refinement for Physics-Grounded Text-to-Video Generation

CVPR 2025

- Check our paper here.

- We have also released a Discord Bot which allows you to try our work with SOTA T2V models.

View more…

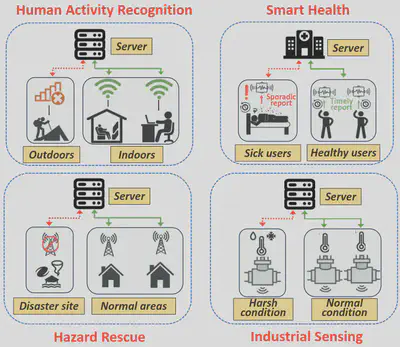

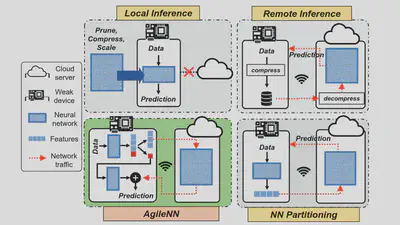

On-device AI

Our research aims to enable high-performance AI inference and training on resource-constrained mobile and embedded devices, in support of emerging applications such as AIoT, smart health, and embodied AI. We use fine-grained, explainable knowledge about AI model execution to determine the most efficient part of the model for on-device training and inference, and we employ modular neural networks that incorporate domain knowledge of specific system applications into the design of the neural network modules. Our recent research focuses on computationally efficient inference and training of modern Large Language Models (LLMs) on weak devices, so that these devices' rich variety of data modalities can be incorporated into the LLMs' representation power, allowing more flexible domain adaptation and model personalization.

Never Start from Scratch: Expediting On-Device LLM Personalization via Explainable Model Selection

MobiSys 2025

Modality Plug-and-Play: Runtime Modality Adaptation in LLM-Driven Autonomous Mobile Systems

MobiCom 2025

When Device Delays Meet Data Heterogeneity in Federated AIoT Applications

MobiCom 2025

Tackling Intertwined Data and Device Heterogeneities in Federated Learning with Unlimited Staleness

AAAI 2025

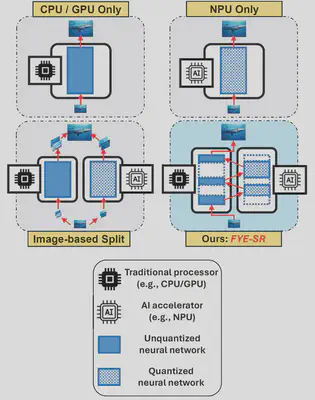

Perceptual-Centric Image Super-Resolution using Heterogeneous Processors on Mobile Devices

MobiCom'24

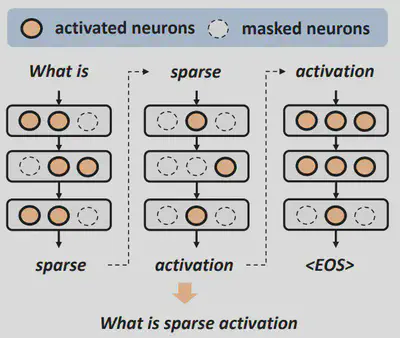

Achieving Sparse Activation in Small Language Models

ArXiv preprint

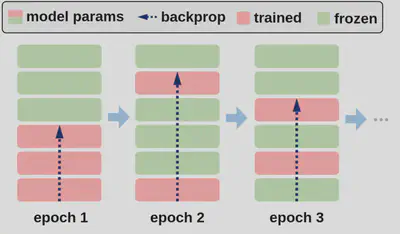

Towards Green AI in Fine-tuning Large Language Models via Adaptive Backpropagation

ICLR 2024

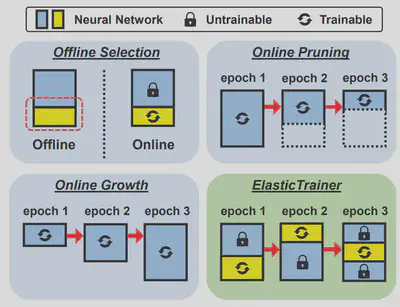

ElasticTrainer: Speeding Up On-Device Training with Runtime Elastic Tensor Selection

MobiSys'23

Real-time Neural Network Inference on Extremely Weak Devices: Agile Offloading with Explainable AI

MobiCom'22

View more…

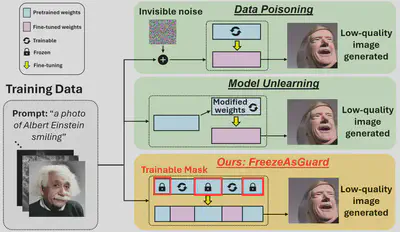

Trustworthy AI

The versatility of recently emerging AI techniques also makes it challenging to ensure that AI systems are safe, fair, and explainable, and that they cause no harm. Our research aims to discover potentially malicious adaptations of AI models and to propose protections and mitigations against unwanted model usage.

FreezeAsGuard: Mitigating Illegal Adaptation of Diffusion Models via Selective Tensor Freezing

ArXiv preprint

View more…

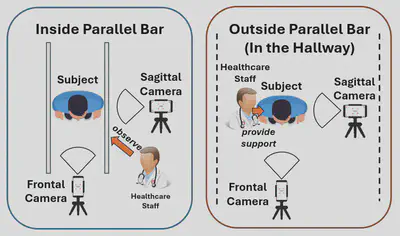

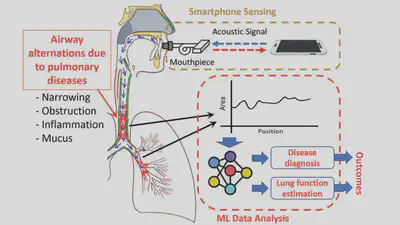

Mobile and Connected Health

Recent technical advances in sensing, computation, and communication on mobile and embedded devices, such as smartphones and wearables, highlight the possibility of pervasive monitoring and unobtrusive diagnostics of various acute or chronic diseases, as convenient and low-cost alternatives to medical-grade methods that require no involvement of clinicians. Our research aims to fully unleash this potential of today's mobile and embedded devices and deliver accurate, efficient, and cost-effective solutions for mobile and connected health. To do so, we employ modern AI tools and develop new AI algorithms that properly extract biomarkers from mobile sensory data and make the extracted biomarkers sufficiently interpretable. Currently, our integrated sensing and AI systems have been widely applied to various clinical applications, including pulmonary telemedicine, post-discharge heart failure risk evaluation and mitigation, and orthopedic disease evaluation.

ProGait: A Multi-Purpose Video Dataset and Benchmark for Transfemoral Prosthesis Users

ICCV 2025

ProGait Dataset

Our ProGait dataset aims to support multiple vision tasks on prosthesis users, including Video Object Segmentation, 2D Human Pose Estimation, and Gait Analysis. Check our dataset page for more information.

PTEase: Objective Airway Examination for Pulmonary Telemedicine using Commodity Smartphones

MobiSys'23

Acoustic Waveform Respiratory Evaluation (AWARE) Dataset

Our AWARE dataset consists of a group of human airway measurements, produced by our integrated AI and sensing systems for smart pulmonary telemedicine. The PTEase paper makes use of the AWARE dataset.

SpiroSonic: Monitoring Human Lung Function via Acoustic Sensing on Commodity Smartphones

MobiCom'20

View more…

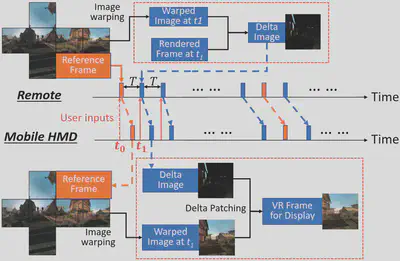

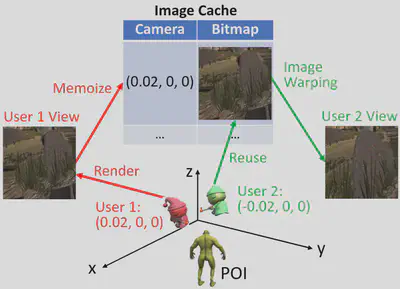

Mobile and Edge Computing Systems

Edge computing remains a viable solution for task offloading that balances network latency against computational power. Our research focuses on the co-design of mobile and edge systems to improve the efficiency of mobile applications with heavy workloads, such as mobile VR rendering.

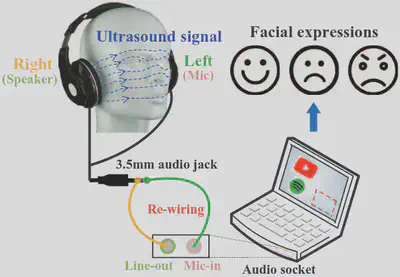

FaceListener: Recognizing Human Facial Expressions via Acoustic Sensing on Commodity Headphones

IPSN'22

Eavesdropping User Credentials via GPU Side Channels on Smartphones

ASPLOS'22

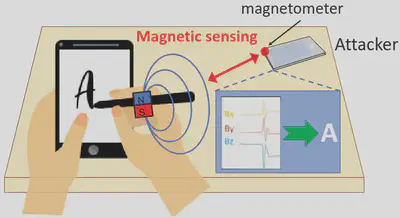

MagHacker: eavesdropping on stylus pen writing via magnetic sensing from commodity mobile devices

MobiSys'20

DeltaVR: achieving high-performance mobile VR dynamics through pixel reuse

IPSN'19

MUVR: Supporting Multi-User Mobile Virtual Reality with Resource Constrained Edge Cloud

IEEE SEC 2018

View more…

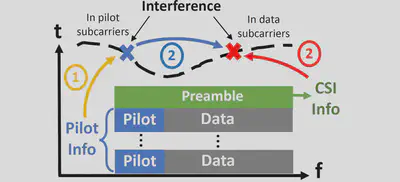

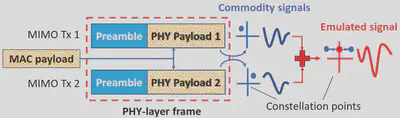

Intelligent Wireless Systems

Wireless communications, such as Wi-Fi, Bluetooth, and Zigbee, play an important role in IoT and mobile applications. However, noisy wireless channel conditions and interference make such communication less effective. Our research focuses on physical layer designs and applies AI-assisted techniques for interference cancellation and efficiency improvement.

AiFi: AI-Enabled WiFi Interference Cancellation with Commodity PHY-Layer Information

SenSys'22

TransFi: emulating custom wireless physical layer from commodity wifi

MobiSys'22

View more…